Article

What we learnt at OpenAI’s annual developer conference and why it matters to you

Published: 9 October 2025

Last year, I wrote “Inside OpenAI’s Exclusive DevDay” to translate developer news into business stories. Since then, Implement has used the capabilities presented there with our clients to push boundaries and set new standards.

The most remarkable thing is that features presented at DevDay have a tremendous impact on all of us, not only developers. This year, artificial intelligence advanced to yet another level with the release of OpenAI’s agents. With such rapid progress, humanity is still on track – and even ahead of trajectory – to achieve artificial general intelligence in just two years.

What changed at DevDay 2025 – and why it matters:

- AgentKit with Agent Builder: Build multistep, tool-using agents on a visual canvas. Crucially, the evaluation engine now picks up traces of where the AI might have gone off track. As a user, you simply annotate what output you were expecting or why you think it failed, and it will automatically update the agentic flow. It also includes plug-and-play guardrails to detect jailbreaks and to mask or flag PII, helping you stay compliant.

- Apps in ChatGPT: A new Apps SDK lets you deliver your application directly inside ChatGPT. This is effectively a new distribution channel without building a separate UI.

- Sora 2 in the API: Video generation has taken a big step forward. The newest model not only produces higher-quality video but also adds a realistic soundscape and even speech. OpenAI demoed a not-yet-released Storyboard solution they built in just 48 hours. It takes hand-drawn sketches of a scene, a library of artifacts, and user-selected landscapes, then generates videos that allow animators to quickly iterate on storyline ideas.

- Codex full release: OpenAI’s coding agent is now generally available. Internal data shows that 92% of technical staff use it daily, teams are committing 70% more features per week, and 100% of code releases are reviewed by Codex.

You should start building agents today

Generative AI is a democratised technology like no other – everyone can take part in the creative process of mapping use cases. At OpenAI, teams use a simple four-step framework to automate back-office tasks.

In a closed-door session, they shared their process for identifying and developing use cases that both improve operational efficiency and enhance the quality of their work:

- Find a high performer within a back-office task.

- Map their workflow. What signals inform decisions? Which tools fetch them? Make implicit steps explicit.

- Co-build in Agent Builder. Sit with the SME. Start from a minimal flow and connect only the tools the human uses. Ship to a small group of testers.

- Evaluate with real human inputs: use actual tickets, emails, or forms – not hypotheticals. After a period in production, review trace failures with the SME, annotate and grade them, then click Optimise. Rerun evaluations to confirm the uplift before widening the rollout.
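The "rerun evaluations to confirm the uplift" step can be pictured with a few lines of plain Python. This is only a sketch under stated assumptions: the names `pass_rate` and `uplift` are hypothetical, grades are simplified to pass/fail booleans, and nothing here calls Agent Builder or any OpenAI API.

```python
def pass_rate(grades: list[bool]) -> float:
    """Share of graded traces that the SME marked as passing."""
    if not grades:
        raise ValueError("at least one graded trace is required")
    return sum(grades) / len(grades)


def uplift(before: list[bool], after: list[bool]) -> float:
    """Change in pass rate between two evaluation runs on the same inputs."""
    return pass_rate(after) - pass_rate(before)


# Hypothetical SME grades for the same four real tickets, before and after optimising.
before_run = [True, False, False, True]
after_run = [True, True, False, True]
print(f"Uplift: {uplift(before_run, after_run):+.0%}")
```

Grading both runs on the same real inputs is what makes the comparison meaningful; a positive uplift is the signal to widen the rollout.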

The next step is to add guardrails for GDPR/PII compliance, jailbreak detection, and groundedness checks before releasing it to the wider organisation.
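To make the idea of a PII guardrail concrete, here is a minimal sketch of masking and flagging in plain Python. It is an illustration only, not how OpenAI's guardrails work: the function name `mask_pii` and both regular expressions are assumptions, and real PII detection needs far broader coverage than emails and phone numbers.

```python
import re

# Hypothetical, deliberately narrow patterns for illustration only.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def mask_pii(text: str) -> tuple[str, bool]:
    """Mask emails and phone numbers; also report whether anything was found."""
    masked, n_email = EMAIL.subn("[EMAIL]", text)
    masked, n_phone = PHONE.subn("[PHONE]", masked)
    return masked, (n_email + n_phone) > 0


masked, flagged = mask_pii("Contact anna@example.com or +45 12 34 56 78")
print(masked, flagged)
```

Returning a flag alongside the masked text lets a flow either continue with the cleaned input or route the item to a human, depending on policy.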

Breakthrough use cases from the day

- Financial services: The global investment firm Carlyle used OpenAI’s evaluation platform to automate company assessments. They report a reduction of more than 50% in the time required to develop a new agent, along with a 30% increase in accuracy. This approach generalises to any standardised research synthesis, vendor risk reviews, RFP screening, or market briefs.

- Customer service: Build a resolution-focused agent using if/else branching, data lookups, and human escalation. Define quality signals in plain language – such as tone, reply length, and resolution confidence – and let the system flag traces for SME review. Then optimise based on that feedback. The goal is not deflection, but faster, safer resolution with a measurable learning loop.
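The customer service pattern above (branching, a data lookup, and human escalation) can be sketched in plain Python. Everything here is hypothetical: the `ORDERS` table stands in for a real data source, the confidence values are hard-coded rather than produced by a model, and `handle_ticket` is not part of any OpenAI product.

```python
from dataclasses import dataclass

# Hypothetical in-memory table standing in for a real order-system lookup.
ORDERS = {"A100": "shipped", "A200": "delayed"}


@dataclass
class Reply:
    text: str
    escalate: bool      # True means a human should take over
    confidence: float   # illustrative resolution confidence, 0.0 to 1.0


def handle_ticket(order_id: str, threshold: float = 0.7) -> Reply:
    """Look up the order, branch on its status, escalate when confidence is low."""
    status = ORDERS.get(order_id)
    if status is None:
        # Nothing to ground an answer on, so hand over to a human immediately.
        return Reply("I could not find that order; a colleague will follow up.", True, 0.0)
    if status == "shipped":
        reply = Reply(f"Order {order_id} has shipped.", False, 0.9)
    else:
        reply = Reply(f"Order {order_id} is {status}.", False, 0.5)
    if reply.confidence < threshold:
        reply.escalate = True
    return reply


for order in ("A100", "A200", "X999"):
    print(order, handle_ticket(order))
```

Keeping the escalation threshold as an explicit parameter makes it one of the quality signals an SME can tune after reviewing flagged traces.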

If you end up deploying customer-facing AI, the Apps SDK puts your application directly inside ChatGPT, allowing users to call your services directly in chats.

Demo: A hands-on article writing agent

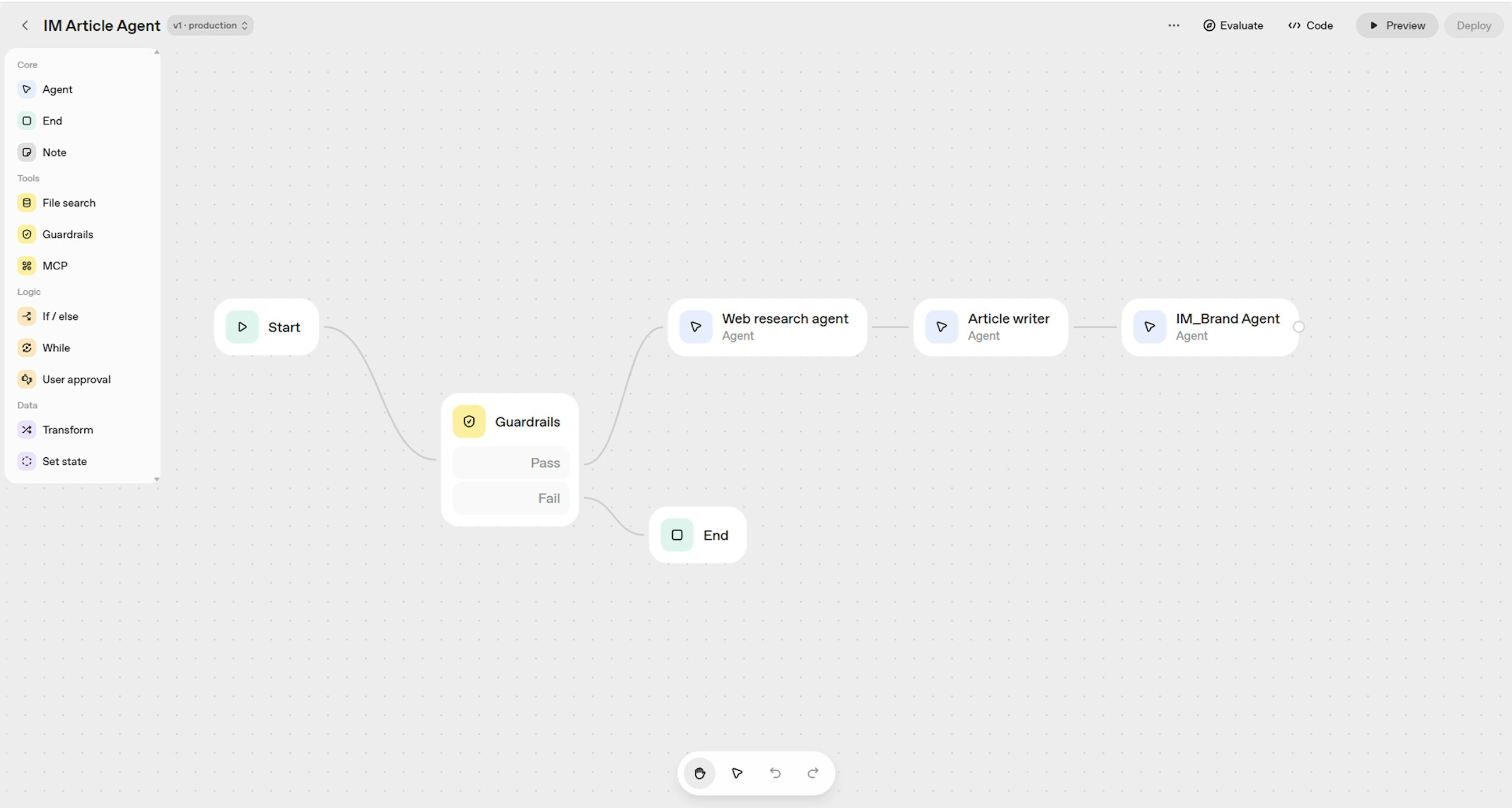

We tested Agent Builder to create an Article agent capable of producing content like what you have just read. The agent takes a written input and files, researches online sources, pulls related Implement articles, drafts the article, and reviews it against Implement brand guidelines.

My actual agent flow took less than 20 minutes to build, prompt, and test, and I could follow how the agents passed information to each other before delivering the final output.

Next for this demo, I plan to strengthen guardrails (jailbreak/PII), define evaluation parameters aligned with our editorial standards, and share it with colleagues for early user testing. The goal is not perfection on day one, but steady improvements grounded in real user input.

Agent-first is now a feasible operating model

Agent Builder addresses the main blockers that halted many agentic pilots: building cost, measuring quality, and pinpointing where failures occur.

My advice is to start small: pick one back-office workflow, co-build with a high performer, evaluate using real inputs, and let traces guide improvements.

Think of AI less as chatbots and more as electricity – quietly and reliably providing intelligence to every task that can be standardised, measured, and improved.

Next year, we will be exploring self-improving and innovative agents. See you then.
