
A practical guide to improving your organisation’s data governance and making sense of untamed information.

Published 6 November 2025

As AI and machine learning propel us into the next era of digitalisation, mastering your data has never been more important. Strong data management underpins how well an organisation drives informed decisions across both strategy and operations.

But connecting performance and information is nothing new. The real challenge lies deeper: in the data that powers every decision. As the world becomes increasingly digital, data pours in from every direction. With IoT linking countless devices, we are flooded with everything from clean, structured information to scattered, disconnected fragments. The question is: how do you tame this tsunami?

Three pillars to master data governance

Strong data governance is built on three pillars: people, processes, and technology. As each evolves in its own way, regular review is essential to spot new opportunities and challenges – and to keep your organisation ready for the demands of the digital age.

People

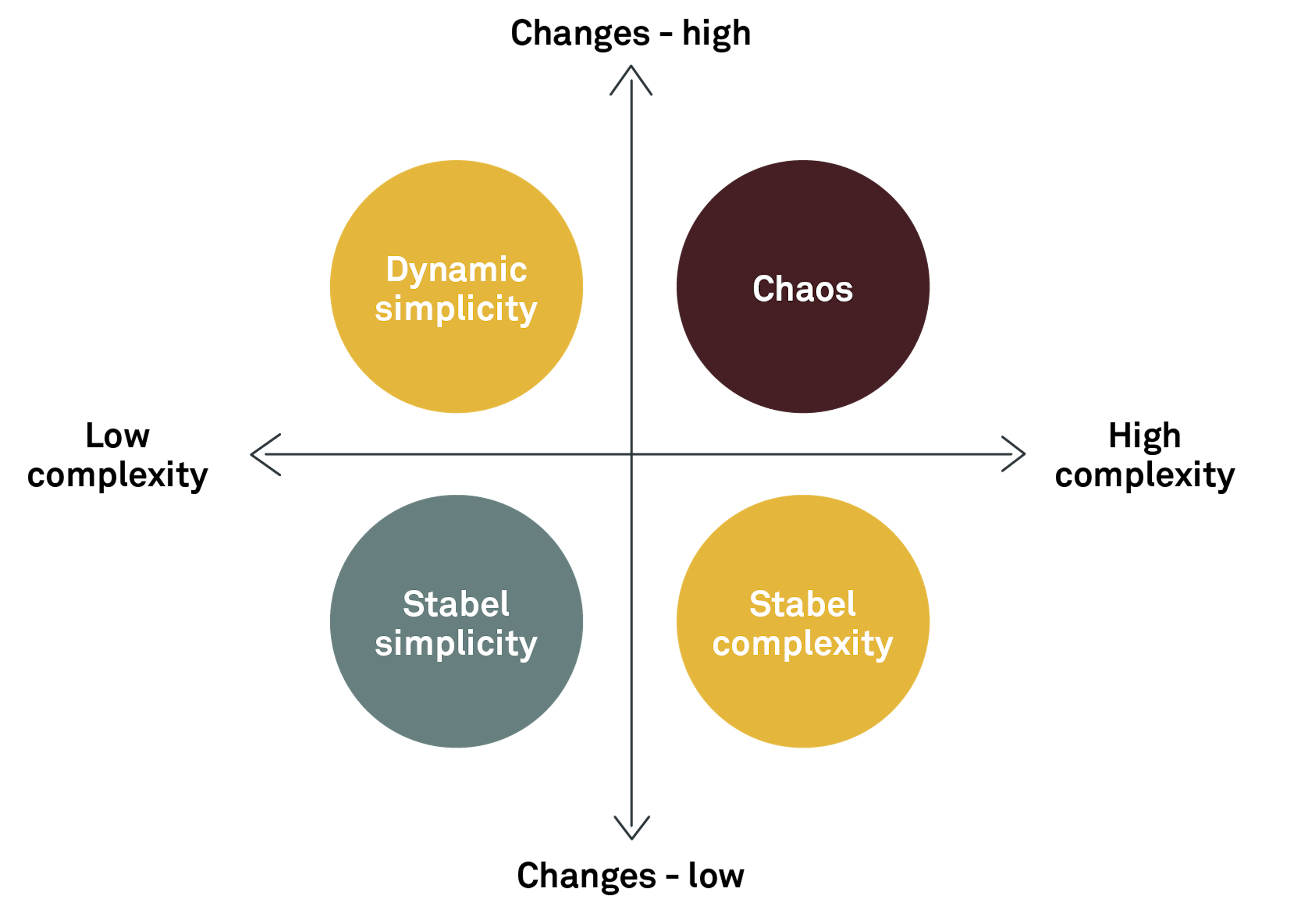

Like the other pillars, the first pillar, people, needs to be nurtured within the environment in which it operates. Two key dimensions, change and complexity (see the figure), often have a direct influence on behaviour in this space. When these become unbalanced, the pillar can weaken, leading to disruption or resistance driven by human limitations. But when people are well supported and cultivated, the pillar thrives, and a thriving people pillar strengthens the organisation by enabling it to adapt effectively to change.

Change and complexity: Navigating the people pillar of data governance

While human nature drives us to explore the unknown, people – creatures of habit, whether we like it or not – typically avoid areas characterised by high complexity and constant change. This tendency stems largely from the increased cognitive load that complex or unfamiliar tasks impose (Sweller, Ayres & Kalyuga, 2011).

Together, data and people create a complex landscape. Within organisations, those who truly understand intricate information are often labelled “experts”. These experts usually emerge during stable periods, around legacy systems built on business-centric platforms such as Windows or SAP. Over time, such platforms have evolved far beyond their original design, extending into nearly every area of operations. As complexity grows, these experts must grow with it, adapting continuously to keep pace.

Experts are catalysts for progress, but only when they are recognised as such and included accordingly. With the right support, they can bridge technology and business, keeping complexity in check as organisations evolve.

To unlock the full value of your people, give them forums to explore where technology and business meet – and, most importantly, listen. When an organisation runs in constant chaos, mental overload follows, and progress stalls. And if it goes on too long, people start asking the hardest question of all: “Why does it matter?”

If people are not properly integrated into complex data processes, or supported in the environments they work in, data enablement will falter. Bring your people and experts together around the business areas that matter most, considering the pace of change and complexity they face. Start where the data challenges are greatest and create consistent forums for collaboration to reduce volatility and keep progress on track.

As your organisation and people grow, consider assigning a dedicated expert to track the latest developments in data, AI, and technology – and to guide your organisation’s data strategy. Creating space for stability and learning in these complex domains greatly improves the likelihood of success for any data initiative.

In short, people are among your most agile and powerful levers for managing data, if supported effectively. Understand what they need to succeed, provide structured forums for sharing insights, and reduce time spent in chaotic states so the organisation can focus on root causes rather than symptoms.

Processes

The second pillar, processes, is often overlooked as an area for improvement. With so many tools available to link activities, it is essential to keep the goal of each task clearly in mind. In the regulatory space, for example, a process must follow specific steps and be validated to ensure compliance, so that it delivers the desired outcome every time the task is completed. So why do processes remain such a challenge? Is it misalignment among the people involved, or does the process itself become lost in execution?

Errors are, of course, always a risk, even in the most robust processes. Machines will follow instructions without question, but that consistency comes with potential pitfalls. Assembly lines illustrate this well: the same task is repeated continuously, allowing businesses to increase speed and reduce costs – yet the risk of malfunction remains, and defective products may need to be discarded.

Novo Nordisk provides a real-world example. Their production line for Ozempic is distributed globally, with scaling still ongoing to meet demand. In their “engine room,” engineers, lab workers, and scientists are on constant standby to intervene if issues arise, because the cost of downtime quickly outweighs the expense of maintaining support staff.

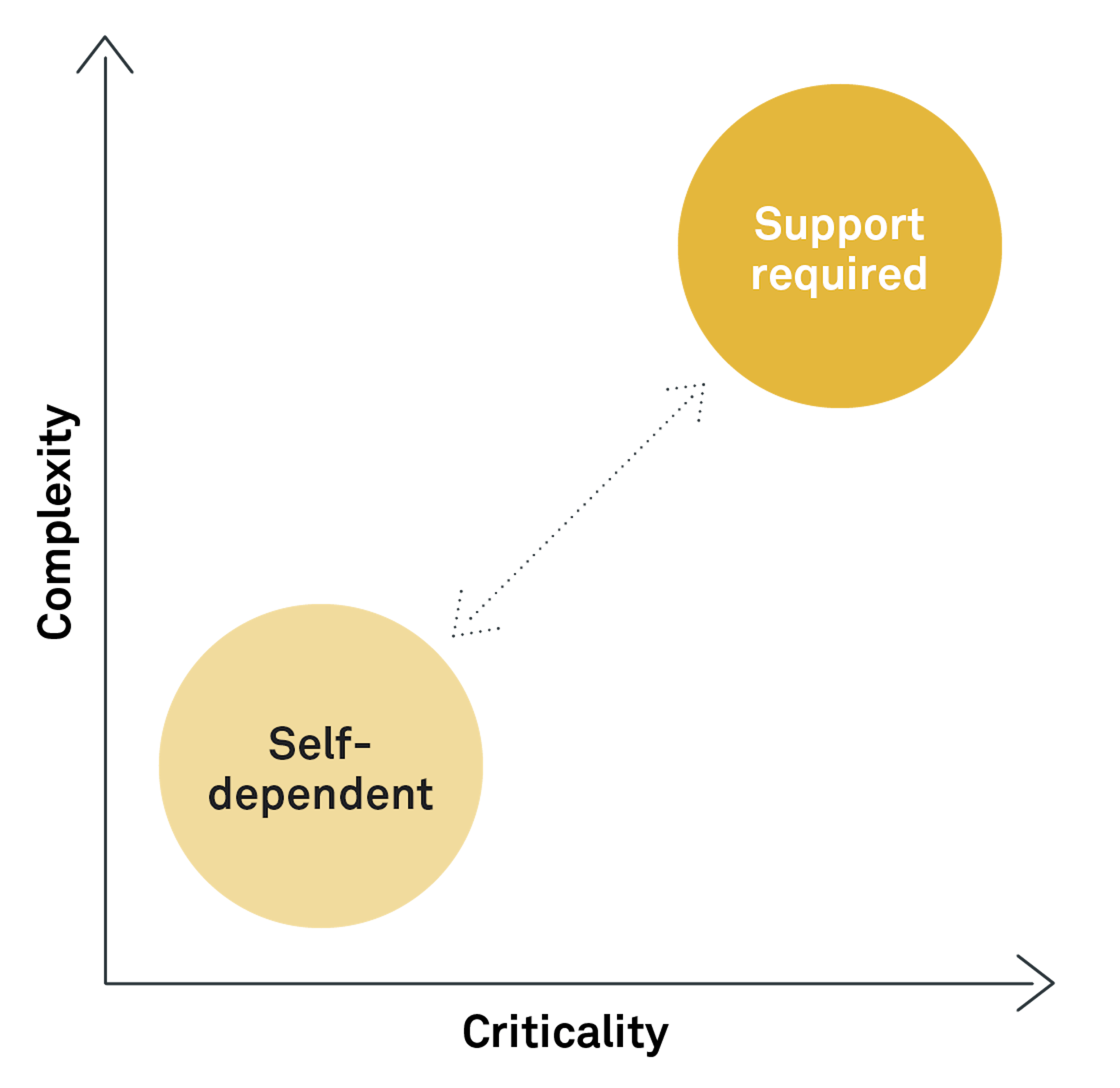

So why is this approach not standard in other businesses? When a process fails, a support function should be ready to intervene during downtime. Yet in many organisations, standardised support systems have replaced hands-on assistance, leaving users to wait for someone with the expertise to resolve the issue.

This can work for tasks of low complexity or importance, where helpdesks and standard procedures are sufficient. But for more critical or complex processes, it is essential to have knowledgeable staff who can step in whenever the situation demands.

Processes should be designed with these considerations in mind. Some may be as simple as exchanging emails, while others involve transferring detailed information in a structured, complex sequence. Exchange points within a process should be monitored according to the importance of the information being shared. When challenges arise, it should be possible to trace where the process broke down and redesign it based on observations.
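To make the idea of monitored exchange points concrete, here is a minimal Python sketch, assuming a process can be modelled as an ordered list of hand-offs, each tagged with an importance level and a simple validation rule. The names `ExchangePoint` and `trace`, the two-level importance scale, and the example steps are hypothetical illustrations, not part of any specific tool or methodology. High-importance hand-offs are validated, low-importance ones are only logged, and the trace records where the process first broke down.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ExchangePoint:
    """One hand-off in a process (hypothetical model)."""
    name: str
    importance: str                # "high" = validate, "low" = log only (assumed scale)
    check: Callable[[dict], bool]  # rule applied to the information handed over


def trace(record: dict, points: list[ExchangePoint]) -> tuple[list[str], Optional[str]]:
    """Pass a record through each exchange point in order.

    Returns a log of what happened at each point and the name of the
    first point where validation failed (None if the record got through).
    """
    log: list[str] = []
    for point in points:
        if point.importance == "high":
            if not point.check(record):
                log.append(f"{point.name}: FAILED")
                return log, point.name
            log.append(f"{point.name}: validated")
        else:
            # Low-importance hand-offs are monitored but not validated.
            log.append(f"{point.name}: logged only")
    return log, None


# Illustrative three-step process; the steps and rules are made up.
points = [
    ExchangePoint("order received by email", "low", lambda r: True),
    ExchangePoint("customer ID mapped", "high", lambda r: bool(r.get("customer_id"))),
    ExchangePoint("invoice amount posted", "high", lambda r: r.get("amount", 0) > 0),
]

log, failed_at = trace({"customer_id": "C-17", "amount": 0}, points)
print(failed_at)  # invoice amount posted
```

The returned log is the kind of observation a redesign could start from: it shows which hand-off failed and which ones were never validated at all.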

To understand and improve a process, it must first be clearly defined. According to Implement’s playbook for business process transformation, a process “is a series of actions or steps taken to achieve a particular end. It involves transforming inputs into outputs using resources such as competencies and materials.”

Recognised methodologies such as Six Sigma and Lean start with defining processes clearly and tailoring them to deliver value, with the ability to quantify quality, time, and output. But how often are processes actually reassessed to identify opportunities and risks? In reality, most organisations focus so heavily on day-to-day operations that legacy processes receive little attention – until something goes wrong, that is.

Processes often evolve by adding steps over time, which can work for tasks of low complexity or criticality. However, this approach carries an inherent risk: steps may be added without justification, introducing unnecessary complexity. The result is often an over-complicated process that, if not managed and controlled promptly, can fail dramatically and with significant consequences. This is particularly true in non-regulated areas, where no compliance validation forces the process to be reviewed.

Designing and optimising a process should always start with its underlying purpose, i.e. the ever-important “why”. Regularly assess a process’s complexity, importance, and need for support. Check in with the process owner (or, if none exists, those closest to the work) to ensure the value proposition remains the key justification for its existence. If it has drifted off course, it is likely time for an adjustment. Every process has an input and an output, and in the world of data, the flow of information can be just as critical as a conveyor belt in a large-scale medical production facility.
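A periodic review of this kind could be captured as a simple checklist. The sketch below is a hypothetical illustration: the 1 to 5 scales, the thresholds, and the `ProcessReview` and `assess` names are assumptions chosen for the example, and a real organisation would calibrate them together with process owners. It combines the questions raised above: what level of support the process needs, whether it has an owner, whether its purpose can still be stated, and whether steps have crept in without review.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProcessReview:
    """Snapshot of one process at review time (hypothetical model)."""
    name: str
    complexity: int                  # 1 (simple) to 5 (highly complex), assumed scale
    importance: int                  # 1 (minor) to 5 (business-critical), assumed scale
    owner: Optional[str]             # None if nobody owns the process
    purpose: str                     # the "why" in one sentence; empty if nobody can state it
    steps_added_since_last_review: int = 0


def assess(p: ProcessReview) -> list[str]:
    """Return follow-up actions; thresholds are illustrative assumptions."""
    actions = []
    # Support model: a helpdesk suffices for simple, low-stakes processes.
    if p.complexity >= 4 or p.importance >= 4:
        actions.append("assign knowledgeable staff who can step in")
    else:
        actions.append("standard helpdesk support is sufficient")
    if p.owner is None:
        actions.append("no owner: review with those closest to the work")
    if not p.purpose.strip():
        actions.append("purpose unclear: redefine the 'why' before optimising")
    # Steps added over time to a non-trivial process need justification.
    if p.steps_added_since_last_review > 0 and p.complexity >= 3:
        actions.append("steps added since last review: justify or remove")
    return actions


reconciliation = ProcessReview(
    name="monthly supplier reconciliation",
    complexity=4, importance=5, owner=None,
    purpose="Ensure supplier balances match the general ledger",
    steps_added_since_last_review=3,
)
for action in assess(reconciliation):
    print(action)
```

Keeping the checks this simple is deliberate: the point is to prompt a conversation with the people closest to the work, not to replace it.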

Technology

The third pillar, technology, represents a vast landscape of opportunities. Just as YouTube’s daily uploads could take a lifetime to watch, the range of technologies available to manage data seems virtually endless. This also means that distinguishing what truly adds value has never been more challenging. Technology giants such as SAP, Microsoft, and Oracle now offer an array of advanced solutions, with AI modules emerging constantly. With tools like GPT and other AI innovations, it is easy to feel behind. Yet in reality, many organisations are still taking their first tentative steps on this journey, if they have begun at all.

While undeniably tempting, technology adoption is not without risk. It was a major decision twenty years ago, and it remains a critical responsibility today, especially when licences go unused because employees either do not know how to use the tools or simply choose not to.

A good rule of thumb is to evaluate costs and the potential business case before introducing new technology. Do not hesitate to create a simple business case that clearly demonstrates the value of adopting modern tools. Ensure the value proposition is well-defined, particularly if rapid progress is the goal.
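A simple business case need not be elaborate. The following Python sketch, using purely illustrative figures rather than real data, compares annual licence costs with the value created by employees who actually use the tool, and computes the adoption rate needed to break even. The function name, its parameters, and the assumption that value scales linearly with the number of active users are simplifications made for the example.

```python
def simple_business_case(
    licences: int,
    cost_per_licence: float,       # annual cost per licence
    adoption_rate: float,          # share of licences actually used (0 to 1)
    value_per_active_user: float,  # assumed annual value created per active user
    one_off_cost: float,           # implementation, training, integration
    years: int = 3,
) -> tuple[float, float]:
    """Return (net value over `years`, break-even adoption rate).

    Assumes value grows linearly with the number of active users,
    which is a deliberate simplification.
    """
    annual_cost = licences * cost_per_licence
    annual_value = licences * adoption_rate * value_per_active_user
    net_value = years * (annual_value - annual_cost) - one_off_cost
    # Adoption rate at which net_value would be exactly zero.
    break_even = (one_off_cost / years + annual_cost) / (licences * value_per_active_user)
    return net_value, break_even


# Illustrative figures only.
net, break_even = simple_business_case(
    licences=200, cost_per_licence=500, adoption_rate=0.8,
    value_per_active_user=1_500, one_off_cost=100_000,
)
print(round(net), round(break_even, 2))  # 320000 0.44

net_low, _ = simple_business_case(
    licences=200, cost_per_licence=500, adoption_rate=0.4,
    value_per_active_user=1_500, one_off_cost=100_000,
)
print(round(net_low))  # -40000
```

In this illustration, the same tool that returns a healthy surplus at 80% adoption loses money at 40% adoption, which is why unused licences deserve a place in the business case.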

The principle of “fail fast” applies when experimenting with uncertain outcomes, and testing emerging technologies is no exception. However, mastery of the technology should follow closely; otherwise, new tools risk being abandoned and left to gather dust like a forgotten relic. One example is the implementation of a smart tool for data lineage control at a large Nordic bank, introduced in response to BCBS 239. Although the tool could integrate natively with the underlying technology (DB2), users were given little instruction and the initiative received little attention from leadership. After three years, the tool was still not fully integrated, and the bank decided to abandon it because the expected benefits had not been realised.

Plan ahead, integrate insights into your business processes, and share information regularly, especially when it demonstrates value. Every technological investment should benefit your organisation; this is, in essence, the cornerstone of accelerated performance. When an investment does not deliver, that hard truth is rarely acknowledged early, and this is a major reason so many large, costly IT projects fail.

Seek inspiration from external sources. Organisations such as Gartner Research (www.gartner.com) and DAMA International (www.dama.org) provide opportunities to join networks or attend conferences that showcase the latest trends. These platforms often highlight successful transformations and integrations, offering valuable guidance when embarking on your own technology journey.

If your organisation works with a technology service provider, draw on their guidance when exploring options. Choosing technology that integrates naturally with your existing ecosystem, such as Microsoft Fabric, Azure, or Power BI, can deliver significant benefits, particularly for scalability and data management.

A common trade-off of large-scale data solutions is reduced agility once a platform is selected. Overcoming this challenge requires a balance of strong leadership, people skills, and technical expertise.

For some within your organisation, adopting modern technology can test resilience. The key to success is documenting the value created and building a positive narrative: this is how you win support and generate momentum for your technology initiatives.

Where to go from here?

Now that you understand the three pillars of data governance, you can begin addressing the framework more holistically.

Imagine dedicated experts responsible for each pillar: trusted advisors who guide you through the ocean of information at your organisation’s disposal. Picture them meeting in structured forums to discuss data usage, quality, and insights in alignment with business strategy. They communicate findings across the organisation, correct misinformation, and establish the principles and rules that govern data management.

Over time, your data becomes more accessible, transparent, and aligned with strategic objectives, supporting the vision of a truly data-driven organisation. While this may seem aspirational, it should remain the goal for any organisation seeking to harness the full power of its data.

Through effective data governance, you delegate ownership, manage processes, and define thresholds that maintain business momentum. Together, these elements provide a firm grasp of what drives your business forward – and where improvements can be made.

Sources

Sweller, J., Ayres, P., & Kalyuga, S. (2011). Cognitive Load Theory. Springer. https://www.emrahakman.com/wp-content/uploads/2024/10/Cognitive-Load-Sweller-2011.pdf?
