Co-intelligence turns AI from a tool into a teammate – and transforms how organisations, teams, and individuals work

10 November 2025

AI does not dehumanise work – it rehumanises it. The paradox is that by delegating mechanical tasks to AI, people gain the freedom, safety, and space to be more human: creative, collaborative, and curious.

Imagine a team huddled around a challenge. They first brainstorm ideas on their own, empty their minds, sketch out possibilities, and explore every creative direction they can think of. Only after this initial stage do they bring AI into the room as a sparring partner. Ideas spark quickly, patterns emerge that no one had noticed before, and rough solutions take shape in minutes. The group then debates, refines, and pushes back on the AI’s suggestions together. What used to take weeks of disjointed effort transforms into a shared creative rhythm, where human judgement and machine insight amplify each other.

This is, in essence, what co-intelligence is all about: teams and AI working together, each amplifying the other’s strengths. It is not about replacing people with machines or merely automating tasks. Instead, it involves sparring, supervising, co-creating, and leveraging AI to verify, update, check, and corroborate information. Used this way, AI does not diminish the human role; it boosts both speed and quality while creating space for creativity and learning.

Pioneering teams around the world are already showing that AI can be more than a productivity booster. When used with care and curiosity, it becomes a companion that helps people learn faster, produce better work, and imagine solutions that once seemed out of reach. These teams are not only ahead of the curve today – they are giving us a glimpse of how work will be transformed tomorrow.

The speed and impact of AI adoption depend less on the technology itself and more on how people, teams, and organisations work together. Even the most advanced AI tools cannot deliver value if collaboration is weak, experimentation is stifled, or decision-making remains siloed. Culture sets the pace – and those who move fast with AI are the ones who get their teams aligned, empowered, and curious from day one.

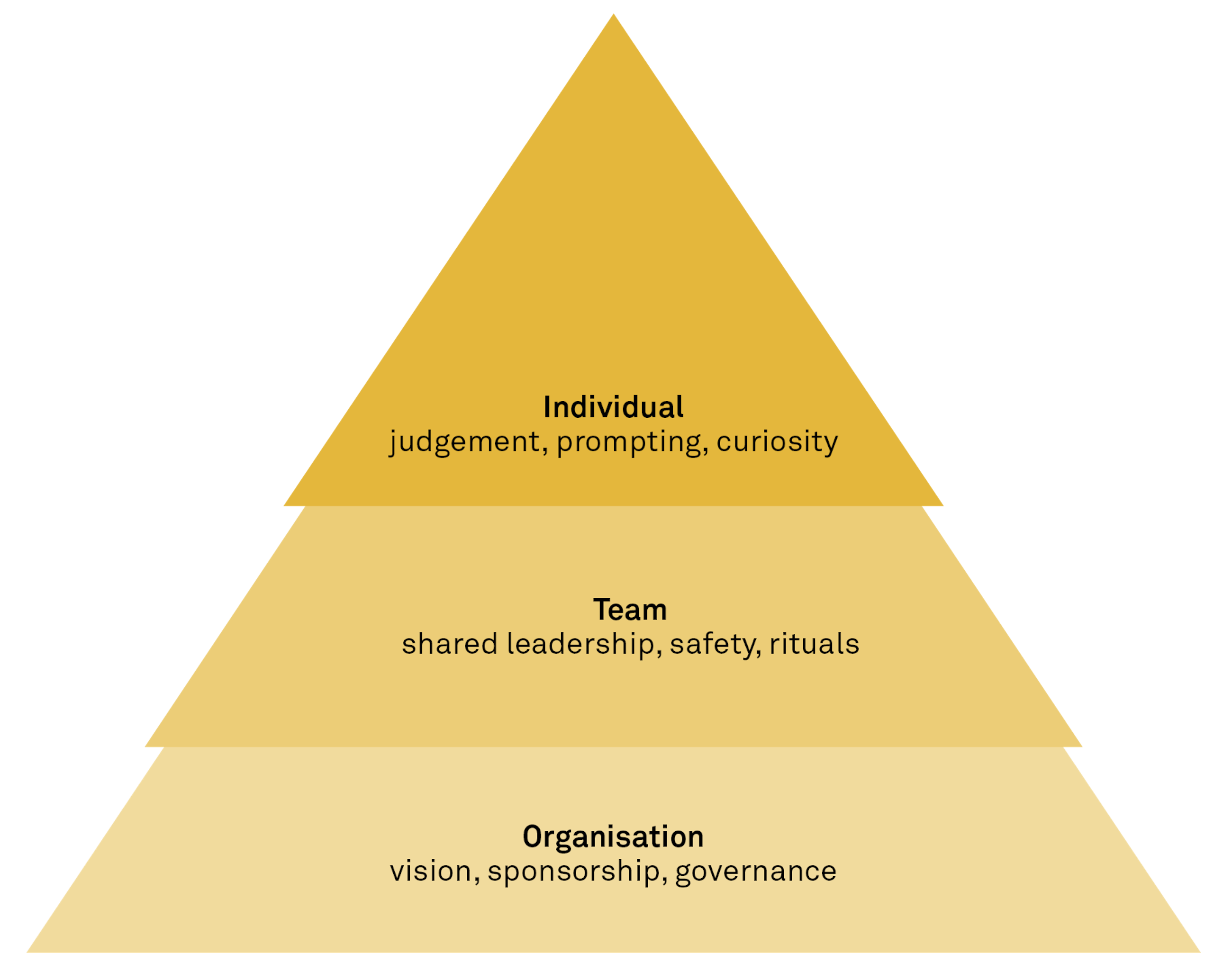

Triad of trust: Organisation, team, individual

Co-intelligence does not emerge on its own – and AI only moves as fast as your organisation. Our insights, drawn from hundreds of sessions with business executives and team leaders, show that co-intelligence must be actively cultivated through the practices and commitments of organisations, teams, and individuals – in that order.

Co-intelligence

– the collaborative integration of human and machine intelligence to solve problems, make decisions, and create value more effectively than either could alone.

Meet an organisation

At a leading Nordic life sciences company, AI transformation began with a simple executive mandate: “Show value and the money will keep coming.” Yet leaders did more than approve initiatives from afar – they sponsored experiments from the lab floor to regulatory affairs, checking in regularly with a supportive “How can we help?” rather than a transactional “Show me the ROI.”

When a junior researcher proposed using AI to accelerate molecular screening, executives cleared bureaucratic barriers and connected them with the right IT resources. This commitment cascaded across the organisation: procurement teams began using AI for supplier risk assessment, clinical teams for data analysis, and regulatory affairs for document reviews. Within 18 months, scattered experiments became a full organisational capability, with every function discovering its own path to value creation. The key was not top-down control but leaders who made themselves available for guidance, removed obstacles, and celebrated instructive failures as openly as successes.

Organisation principles

What made this transformation possible? Successful organisations make deliberate choices to let co-intelligence thrive. In this example, the clear executive mandate provided direction and purpose, linking AI directly to strategy and operations. The pilot experiments – the molecular screening project, procurement risk analysis, and regulatory document review – illustrate how small initiatives can scale into company-wide practices, eventually embedding AI into the culture.1

Here, leadership presence is critical. The executives in the Nordic company not only approved initiatives but actively engaged, joining experiments, asking questions, and modelling responsible use. In any organisation, C-suite presence of this kind reassures teams that AI is firmly anchored in the business, and it is the type of commitment that helps initiatives succeed.2

Frontier organisations complement strong leadership with inclusive adoption. Instead of leaving AI to a small group of experts, they enable all teams, from lab researchers to procurement staff, to participate. When top-down guidance is balanced with bottom-up momentum, vision and grassroots creativity reinforce each other, and adoption spreads across functions.3

The Nordic company also recognised the importance of nurturing a culture of experimentation, giving people the space and psychological safety to test, fail, and learn openly.4 Teams operating in such cultures scale AI use cases faster and generate the energy that carries adoption forward.

Psychological safety

– the shared belief that a team or group is safe for interpersonal risk-taking, where people feel comfortable speaking up, asking questions, admitting mistakes, and challenging ideas without fear of embarrassment or punishment.

Meet a team

In the procurement function of a Danish energy trading company, five team members transformed their approach to supplier management by treating AI as their sixth team member.

During their weekly supplier review meetings, they began by having AI analyse contract terms across their portfolio, flagging risks and opportunities in real time. One member became the team’s ‘prompt architect’, sharing templates that others adapted to their specific needs. Another specialised in validation, developing a checklist for verifying AI-generated insights against market knowledge. The result was a strong human-machine collaboration with clearly shared roles.

When evaluating a complex wind farm equipment tender, they used AI to synthesise technical specifications, market pricing, and supplier performance data. Work that once took days was now completed during the meeting itself. The breakthrough came when they stopped hiding their AI use and began opening every analysis with a “Here’s how we built this.” Junior members felt safe to experiment because seniors openly shared their own AI failures and learnings. The result: tender evaluation time dropped by 60% – but more importantly, the team uncovered patterns in supplier behaviour they had never noticed before, paving the way for more strategic partnerships.

Team principles

What made this transformation possible? High-performing teams make deliberate choices to let co-intelligence thrive. In this case, psychological safety was the foundation. Because AI tools are new and many use cases unfamiliar, people – and this includes leaders – need to feel safe showing the vulnerability that comes with asking ‘dumb’ questions or voicing uncertainties. Senior members of the procurement team modelled this openness by sharing both their successes and mistakes, setting a tone that encouraged experimentation.

Shared leadership also played a crucial role. When multiple members take responsibility instead of everything resting on one person, teams perform better. This distributed ownership builds confidence, makes collaboration more inclusive, and ensures that AI use is both responsible and embedded in daily work.5 High-performing teams cultivate psychological safety to support this kind of shared leadership, fostering an atmosphere where curiosity and critique feel natural. Research has long linked higher levels of psychological safety to stronger team learning and better team performance.6

Collective learning was a third ingredient. The team engaged in shared exploration – exchanging prompts, reflecting on what worked, and building competence together. The ‘prompt architect’ approach helped make AI use visible and practical for everyone, reinforcing the idea that AI capability grows through community, not hierarchy.

Equally important was openness around AI use. By tackling what might be called ‘GPT hesitancy’ head-on, for instance by opening every analysis with “Here’s how we built this”, the team turned potential discomfort into an opportunity for shared learning and growth.

When these conditions were in place, AI stopped being an add-on and became part of how the team thought, created, and delivered. The result was faster, more imaginative, and ultimately more human collaboration.

High-performing teams do not wait for perfect conditions – they experiment relentlessly, tackle broken processes first, and empower functional experts to co-create solutions. AI only accelerates what the culture already allows: curiosity, openness, and real-world testing.

GPT hesitancy

– the reluctance to use or visibly rely on generative AI due to fear of how others might judge your competence, credibility, or ethics.

Meet an individual

On a renewable energy project, a project manager began using AI to prepare meeting agendas, draft risk logs, and compile status updates from multiple workstreams. What once took hours of collecting and formatting was now ready in minutes, complete with suggestions and previously overlooked details. The real work now lay in guiding, editing, and directing these drafts – a process that sharpened both the output and the manager’s own thinking.

With routine preparation delegated to AI, the manager could focus on reflecting on scenarios, facilitating workshops, and developing creative solutions, both individually and collaboratively with the team. AI acted as a capable partner, handling repetitive tasks and generating insights – but its input still required human scrutiny and validation, much like mentoring a junior colleague whose skills are still developing. By combining AI’s contributions with human oversight, the work became richer, more collaborative, and ultimately more meaningful.

Individual principles

What made this transformation possible? Individuals who thrive with co-intelligence cultivate both a new set of skills and a fresh mindset. They strengthen critical thinking by applying judgement, checking for bias, and validating outputs rather than accepting them at face value. This kind of evaluation helps guard against overreliance on AI suggestions, a real risk given that people often prefer algorithmic advice to human judgement, and it improves decision quality.7

They also master the art of delegation and context-giving, recognising that AI performs best when given clear instructions and sufficient background. The ability to brief effectively is not only central to achieving quality outcomes but also sharpens people’s own thinking in the process.8 In the case of the project manager, each prompt became a tool for reflection – clarifying objectives, framing problems, and testing assumptions before acting.

Equally important is openness in communication. Individuals who explain how they use AI – what worked, what did not, and why – help build capability across the organisation.9 Their willingness to share prompts and learnings turns isolated productivity gains into collective growth.

Curiosity and creativity also distinguish individuals who excel with AI. Rather than using it solely to save time, they use it to explore, to question, and to imagine new solutions. Research suggests that those who approach AI with curiosity and experimentation generate more innovative ideas and avoid falling into routine use.10

Finally, these individuals balance depth and breadth. Experts validate subtle details; generalists connect insights across domains. Together, these qualities shift the individual’s role from producing to editing, supervising, and creating – moving routine execution to machines while allowing people to focus on judgement, collaboration, and impact. The result is not less human work, but more meaningful human work.

Playing to strengths

Co-intelligence is not magic. It works because humans and AI excel at different things. Where AI brings speed, scale, pattern recognition, and fluent generation, people bring judgement, context, values, imagination, and purpose. Put together thoughtfully, they amplify one another. In chess, well-designed ‘centaur’ teams (combining human creativity with AI’s computing power) have outperformed both humans and machines playing alone, and research suggests similar synergies are possible in knowledge work.11

Yet AI’s abilities are uneven, forming what AI researcher and Wharton professor Ethan Mollick calls a ‘jagged frontier’ where human oversight remains essential.12 This unevenness can also make AI a powerful accelerator of learning: because its output has to be checked, people engage critically with every draft. By shortening the loop between action and reflection, AI lets people draft, critique, and iterate in minutes. Real understanding comes from this kind of experimentation, not from theory alone.13 In education, for example, AI-powered feedback is already making assessment more adaptive and more reflective.14

Jagged frontier

– the uneven boundary between tasks AI performs well and tasks it struggles with. AI capabilities vary widely across domains, performing surprisingly well in some areas and unexpectedly weak in others.

At the same time, AI is reshaping how work is experienced. By taking on routine reporting, formatting, and information processing, it eliminates pseudo-work and frees up time for higher-value tasks. According to research reported by MIT Sloan, it has the potential to improve a highly skilled worker’s performance by up to 40%.15 Just as importantly, it lets individuals shift their energy from mechanical execution to strategic thinking, collaboration, and creative problem-solving.

As so often happens, what benefits individuals extends well beyond them. When team members share AI practices, they generate richer solutions and refine ideas more quickly. Organisations that embed co-intelligence into their ways of working build resilience: adapting faster to shocks, redesigning processes, and creating new forms of value. In supply chains, for example, AI enhances predictive capabilities, enabling firms to anticipate disruptions and respond more effectively.16

Where theory warns, practice listens

The benefits of co-intelligence are real, but they are neither automatic nor guaranteed. Four key principles apply:

The four key principles

The same conditions that make co-intelligence thrive – curiosity, shared leadership, psychological safety – are also the ones that protect against its risks.

The moving frontier

AI is moving at a pace that surprises everyone – even those working in the field. What feels like a utopia today will likely seem naive in only a few months. By then, it may have been replaced by practices and possibilities most of us cannot imagine yet. The frontier keeps shifting, and teams that now look futuristic will soon be seen as early pioneers on a much longer journey.

This rapid movement constantly changes benchmarks. Efficiency gains are quickly becoming the baseline, while the real transformation lies in reshaping culture, collaboration, and value creation. It is therefore worth remembering that co-intelligence is not a fixed destination but a moving horizon, where the questions evolve as fast as the tools themselves.

Amid all this change, one principle remains constant: organisations that thrive are those that adopt AI in ways that are fit for humans and fit for the future. Success depends not just on technology, but on cultivating trust at every level – within the organisation, across teams, and in each individual. By keeping people at the centre and using technology as a partner, organisations can harness co-intelligence to keep pace with progress and shape how work will feel in the years ahead. Co-intelligence is not about making humans more like machines – it is about making work more human again. The question is: how will your organisation help to ensure that it is humans, not machines, who define the next frontier of work?

Sources:

1. Implement Consulting Group. (2024, July). Running an 8-week generative AI pilot. Implement Consulting Group. https://implementconsultinggro...

2. Prosci. (2023–2025). Change management research highlights. https://www.prosci.com/change-...

3. O’Reilly, C. A., & Tushman, M. L. (2004). The ambidextrous organization. Harvard Business Review. https://hbr.org/2004/04/the-am...

4. Implement Consulting Group. (2024, March). Autonomous AI agents. Implement Consulting Group. https://implementconsultinggro...

5. Nicolaides, V. C., LaPort, K. A., Chen, T. R., Tomassetti, A. J., Weis, E. J., Zaccaro, S. J., & Cortina, J. M. (2014). Shared leadership and team performance: A meta-analysis. The Leadership Quarterly, 25(5), 923–942. https://doi.org/10.1016/j.leaq...

6. Edmondson, A. (1999). Psychological safety and learning behavior in work teams. Administrative Science Quarterly, 44(2), 350–383. https://doi.org/10.2307/266699...

7. Logg, J. M., Minson, J. A., & Moore, D. A. (2019). Algorithm appreciation: People prefer algorithmic to human judgment. Organizational Behavior and Human Decision Processes, 151, 90–103. https://doi.org/10.1016/j.obhd...

8. Mollick, E. (2023). Co-Intelligence: Living and Working with AI. One Useful Thing. https://www.oneusefulthing.org

9. Wu, Q., Cormican, K., & Chen, G. (2021). A meta-analysis of shared leadership and team effectiveness. Journal of Business Research, 134, 302–318. https://doi.org/10.1016/j.jbus...

10. Brynjolfsson, E., Li, D., & Raymond, L. R. (2023). Generative AI at Work (NBER Working Paper No. 31161). National Bureau of Economic Research. https://www.nber.org/papers/w3...

11. Shoresh, D., & Loewenstein, Y. (2024, December 24). Modeling the centaur: Human-machine synergy in sequential decision making. arXiv. https://arxiv.org/abs/2412.185...

12. Mollick, E. (2025). Making AI Work: Leadership, Lab, and Crowd. One Useful Thing. https://www.oneusefulthing.org...

13. Mollick, E. (2023). Co-Intelligence: Living and Working with AI. One Useful Thing. https://www.oneusefulthing.org

14. U.S. Department of Education, Office of Educational Technology. (2023). Artificial intelligence and the future of teaching and learning: Insights and recommendations. U.S. Department of Education. https://www.ed.gov/sites/ed/fi...

15. Somers, M. (2023, October 19). How generative AI can boost highly skilled workers’ productivity. MIT Sloan School of Management. https://mitsloan.mit.edu/ideas...

16. Guo, X., Chen, Y., Xie, J., et al. (2025). Research on supply chain resilience mechanism of AI-enabled manufacturing enterprises based on organizational change perspective. Scientific Reports, 15, 31177. https://doi.org/10.1038/s41598...

17. Gerlich, M. (2025). AI tools in society: Impacts on cognitive offloading and the future of critical thinking. Societies, 15(1), 6. https://doi.org/10.3390/soc150...

18. Meincke, L., Nave, G., & Terwiesch, C. (2025). ChatGPT decreases idea diversity in brainstorming. Nature Human Behaviour, 9, 1107–1109. https://doi.org/10.1038/s41562...

19. Adanyin, A. (2024). AI-driven feedback loops in digital technologies: Psychological impacts on user behaviour and well-being. arXiv. https://doi.org/10.48550/arXiv...